How to Deploy and Scale your Rails application with Kamal

Guillaume Briday


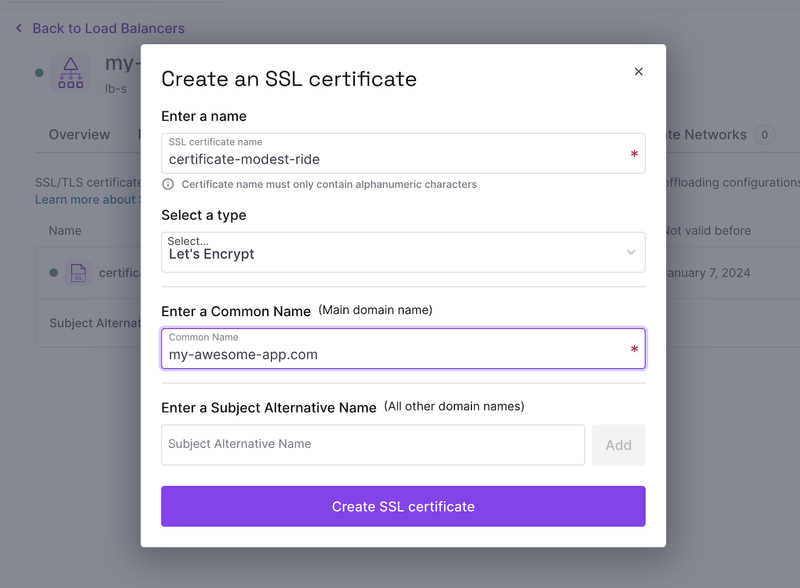

For bigger projects, it might be a good idea to split the workload among multiple servers based on their specific purposes.

For instance, in our case, we would like one server for our database, another one for Redis, one for our background job processor like Sidekiq, and multiple servers for our Rails application to share the requests between them and respond as fast as possible.

Paradoxically, it's actually easier to deploy our application on multiple hosts than on a single one, because we don't have to worry about Docker private networks or Traefik SSL certificates. It does cost more money, though.

Because multiple servers need to respond to the same URL, we need a Load Balancer. Most managed Load Balancers handle SSL certificates for you, so you don't have to do anything.

ℹ️ Most of the configuration and concepts remain the same as in the previous post, so you should read it before this one.

Before starting

Let's see what our infrastructure would look like on a diagram.

Every single element on this diagram lives on its own server. Even if Kamal makes them easy to deploy, you still have to manage each of them. Keep that in mind when considering this type of architecture.

Make sure you actually need it before choosing it over deploying everything on a single server.

1. Prepare your servers

In this example, we will have 5 servers:

- 2 for Ruby on Rails

- 1 for Sidekiq

- 1 for PostgreSQL

- 1 for Redis

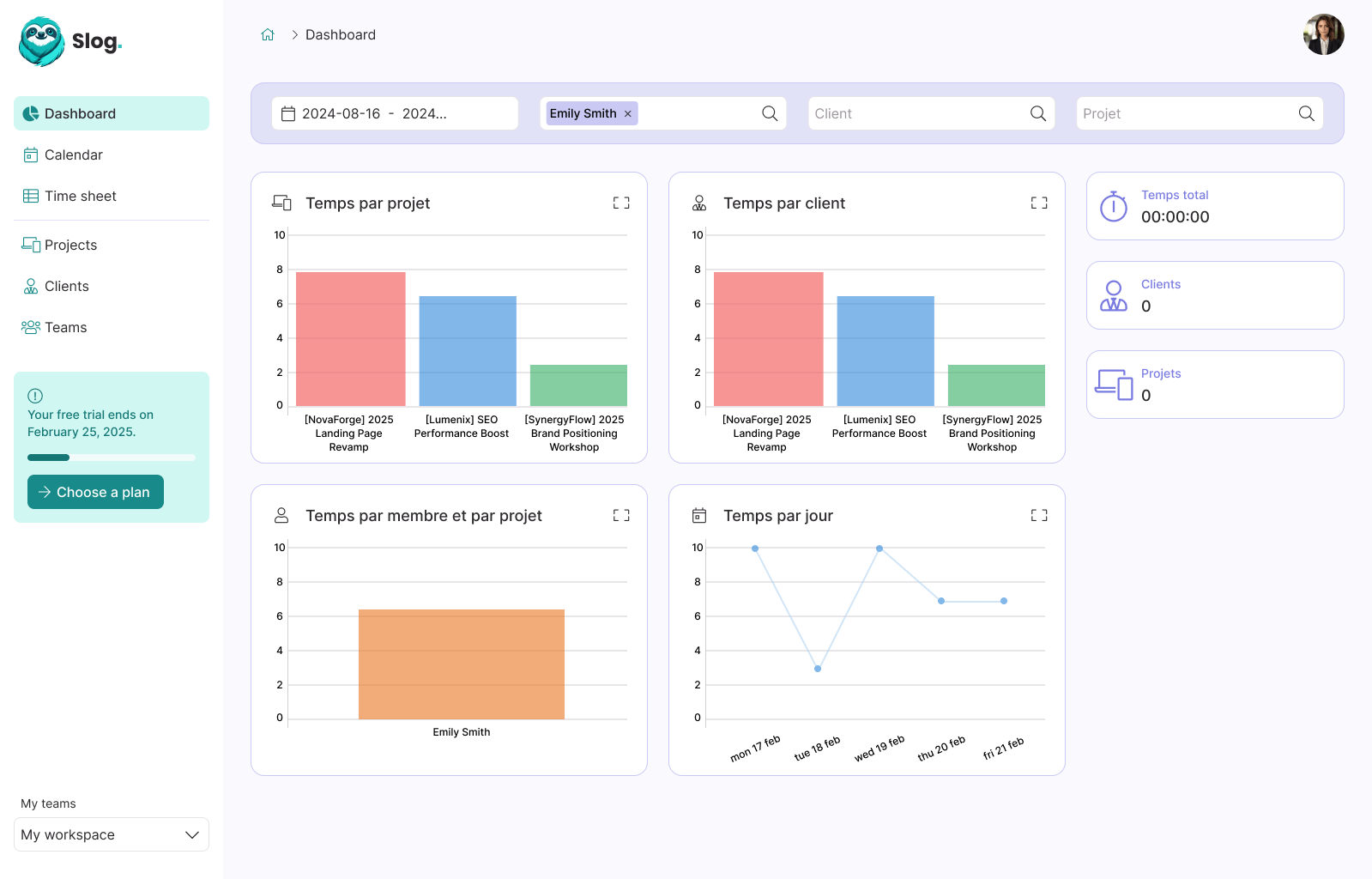

I named them according to their purpose because it makes them easier to find in the Scaleway dashboard, but Kamal only needs their IP addresses.

They are all hosted with the same provider to limit network latency between data centers, but you could host each server anywhere; it does not matter. You could even split them between different providers.

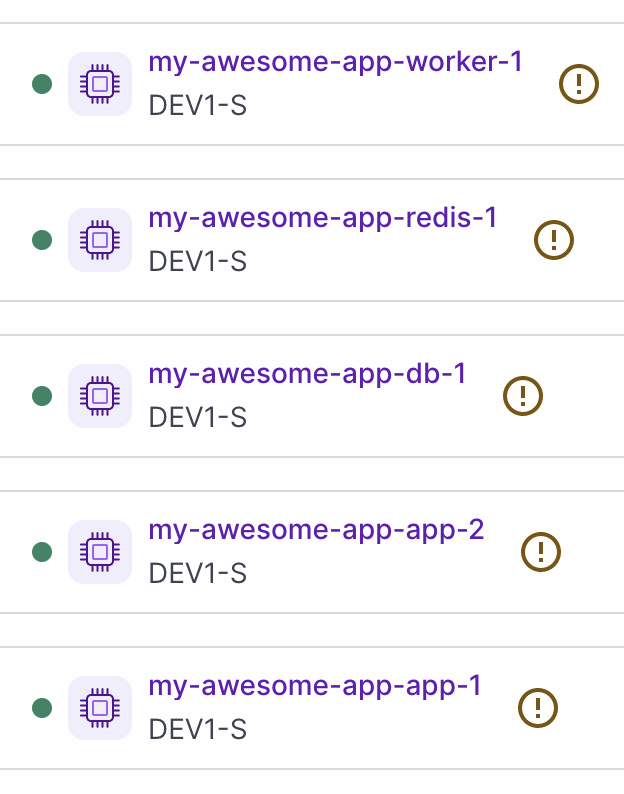

Because it can be very repetitive and error-prone, I use my Ansible Playbook guillaumebriday/kamal-ansible-manager to configure all the servers at once. I only have to set all the IP addresses in the hosts.ini file and run ansible-playbook -i hosts.ini playbook.yml to update and secure all the servers.
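If you keep the IP addresses in one place, the inventory can be generated instead of typed by hand. Below is a minimal Ruby sketch that prints an Ansible INI inventory for the five servers. The hostnames and the `webservers` group name are assumptions, so check which group the playbook you run actually targets.

```ruby
# Hypothetical server list: names mirror the Scaleway instances, IPs match deploy.yml.
SERVERS = {
  "rails-1"    => "192.168.0.1",
  "rails-2"    => "192.168.0.2",
  "sidekiq"    => "192.168.0.3",
  "postgresql" => "192.168.0.4",
  "redis"      => "192.168.0.5",
}.freeze

# Builds an INI inventory: one group header followed by one IP per line.
# The default group name is an assumption; match it to your playbook's `hosts:`.
def ansible_inventory(ips, group: "webservers")
  (["[#{group}]"] + ips.uniq).join("\n") + "\n"
end

# Usage: ruby inventory.rb > hosts.ini
puts ansible_inventory(SERVERS.values)
```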

🔒 To improve security, you could also configure UFW to only allow required ports based on the service the servers will host. For instance, PostgreSQL does not need to have port 443 opened; you could deny it on this specific server.
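A minimal Ruby sketch of that idea, deriving per-role `ufw` commands for this layout. Assumptions not taken from the setup above: SSH listens on port 22, the Load Balancer terminates SSL and reaches the web hosts on port 80, and only the web and job hosts need PostgreSQL and Redis (192.168.0.1 also runs the `s3_backup` accessory). One caveat to verify on your own hosts: ports published by Docker containers, such as the accessories' `port:` settings, are handled by Docker's own iptables rules and may not be restricted by UFW.

```ruby
# Sketch: per-role UFW commands for the five-server layout.
# Assumptions: SSH on 22, the Load Balancer reaches web hosts on port 80,
# and only web + job hosts talk to PostgreSQL and Redis.
ROLES = {
  web:   %w[192.168.0.1 192.168.0.2],
  job:   %w[192.168.0.3],
  db:    %w[192.168.0.4],
  redis: %w[192.168.0.5],
}.freeze

# 192.168.0.1 also runs the s3_backup accessory, which needs PostgreSQL.
APP_HOSTS = (ROLES[:web] + ROLES[:job]).freeze

# Returns the ufw commands to run, in order, on a host with the given role.
def ufw_commands(role)
  commands = ["ufw default deny incoming", "ufw default allow outgoing", "ufw allow 22/tcp"]
  case role
  when :web
    commands << "ufw allow 80/tcp"
  when :db
    APP_HOSTS.each { |ip| commands << "ufw allow from #{ip} to any port 5432 proto tcp" }
  when :redis
    APP_HOSTS.each { |ip| commands << "ufw allow from #{ip} to any port 6379 proto tcp" }
  end
  commands << "ufw --force enable"
end

ROLES.each do |role, ips|
  puts "# #{role} (#{ips.join(', ')})"
  puts ufw_commands(role)
end
```

Note that the PostgreSQL server never gets port 443 (or 80) opened, which is exactly the kind of per-service restriction described above.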

2. Update the configuration files

The configuration is very similar to the previous one, but we need to adjust a few things.

Servers

Let's configure our servers:

```yaml
servers:
  web:
    hosts:
      - 192.168.0.1
      - 192.168.0.2
  job:
    hosts:
      - 192.168.0.3 # Notice that it is not the same as in `web`
    cmd: bundle exec sidekiq -q default -q mailers
```

Because there is no more Traefik and Docker network configuration, it is much easier to read, isn't it?!
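Kamal itself lets several roles share a host, so nothing stops you from listing 192.168.0.1 under both `web` and `job` by mistake. The Ruby sketch below turns this article's one-purpose-per-server layout into a check. The helper names are hypothetical; it only reads the `servers` section and accepts roles written either as a `hosts:` mapping or as a plain list of hosts.

```ruby
require "yaml"

SERVERS_YAML = <<~YAML
  servers:
    web:
      hosts:
        - 192.168.0.1
        - 192.168.0.2
    job:
      hosts:
        - 192.168.0.3
      cmd: bundle exec sidekiq -q default -q mailers
YAML

# Maps each host to the list of roles that reference it.
def roles_by_host(config)
  config.fetch("servers").each_with_object(Hash.new { |hash, key| hash[key] = [] }) do |(role, settings), acc|
    # A role is either `{ "hosts" => [...], ... }` or a plain list of hosts.
    hosts = settings.is_a?(Hash) ? settings.fetch("hosts") : settings
    hosts.each { |host| acc[host] << role }
  end
end

# Keeps only the hosts listed under more than one role.
def shared_hosts(config)
  roles_by_host(config).select { |_host, roles| roles.size > 1 }
end

shared = shared_hosts(YAML.safe_load(SERVERS_YAML))
puts(shared.empty? ? "Each host has a single role." : "Hosts shared between roles: #{shared}")
```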

Accessories

Now let's configure our accessories with the correct directories so that the files are saved on the disk and won't be removed between deployments.

```yaml
accessories:
  db:
    image: postgres:16
    host: 192.168.0.4
    port: 5432 # Don't forget to expose the port
    env:
      clear:
        POSTGRES_USER: "my_awesome_app"
        POSTGRES_DB: "my_awesome_app_production" # The database will be created automatically on first boot.
      secret:
        - POSTGRES_PASSWORD
    directories:
      - data:/var/lib/postgresql/data
  redis:
    image: redis:7.0
    host: 192.168.0.5
    port: 6379 # Don't forget to expose the port
    directories:
      - data:/data
```

Environment variables

```yaml
env:
  clear:
    RAILS_SERVE_STATIC_FILES: true
    POSTGRES_USER: "my_awesome_app"
    POSTGRES_DB: "my_awesome_app_production"
    POSTGRES_HOST: 192.168.0.4 # Same as the db accessory
    REDIS_URL: "redis://192.168.0.5:6379/0" # Same as the redis accessory
  secret:
    - RAILS_MASTER_KEY
    - SLACK_WEBHOOK_URL
    - GOOGLE_CLIENT_ID
    - GOOGLE_CLIENT_SECRET
    - CLOUDFRONT_ENDPOINT
    - POSTGRES_PASSWORD
```

Don't forget to add the secret environment variables to your .env file as well.
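Because the same IP addresses appear in both `env` and `accessories`, they can drift apart when a server is replaced. A hypothetical consistency check in Ruby could look like the sketch below. It assumes accessory ports are plain numbers (not `host:port` strings) and only covers the values marked "Same as the ... accessory".

```ruby
require "yaml"
require "uri"

# Trimmed copy of the relevant parts of deploy.yml.
DEPLOY_YAML = <<~YAML
  env:
    clear:
      POSTGRES_HOST: 192.168.0.4
      REDIS_URL: "redis://192.168.0.5:6379/0"
  accessories:
    db:
      host: 192.168.0.4
      port: 5432
    redis:
      host: 192.168.0.5
      port: 6379
YAML

# Returns human-readable mismatches between env and accessories (empty if none).
def env_mismatches(config)
  clear = config.fetch("env").fetch("clear")
  db = config.fetch("accessories").fetch("db")
  redis = config.fetch("accessories").fetch("redis")
  redis_uri = URI.parse(clear.fetch("REDIS_URL"))

  errors = []
  errors << "POSTGRES_HOST does not match accessories.db.host" unless clear["POSTGRES_HOST"] == db["host"]
  errors << "REDIS_URL host does not match accessories.redis.host" unless redis_uri.host == redis["host"]
  # Assumes `port` is a plain number, not a "host:port:container_port" string.
  errors << "REDIS_URL port does not match accessories.redis.port" unless redis_uri.port == Integer(redis["port"])
  errors
end

problems = env_mismatches(YAML.safe_load(DEPLOY_YAML))
puts(problems.empty? ? "env matches the accessories" : problems)
```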

We need to update the config/database.yml file to match our configuration in deploy.yml:

```yaml
default: &default
  adapter: postgresql
  encoding: unicode
  # For details on connection pooling, see Rails configuration guide
  # https://guides.rubyonrails.org/configuring.html#database-pooling
  pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>

production:
  <<: *default
  username: <%= ENV["POSTGRES_USER"] %>
  password: <%= ENV["POSTGRES_PASSWORD"] %>
  database: <%= ENV["POSTGRES_DB"] %>
  host: <%= ENV["POSTGRES_HOST"] %> # Because we don't use DATABASE_URL, we need to add this line.
```

And use Redis as the cache store in config/environments/production.rb:

```ruby
config.cache_store = :redis_cache_store, { url: ENV.fetch('REDIS_URL') }
```

No need to update Sidekiq: it uses REDIS_URL by default.
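To see which values actually reach the PostgreSQL adapter, you can render database.yml the way Rails conceptually does: ERB first, then YAML. This is a sketch with a hypothetical helper name and sample values; Rails' own configuration loader does more than this.

```ruby
require "erb"
require "yaml"

# Same structure as config/database.yml above (comments trimmed).
DATABASE_YML = <<~'YAML'
  default: &default
    adapter: postgresql
    encoding: unicode
    pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>

  production:
    <<: *default
    username: <%= ENV["POSTGRES_USER"] %>
    password: <%= ENV["POSTGRES_PASSWORD"] %>
    database: <%= ENV["POSTGRES_DB"] %>
    host: <%= ENV["POSTGRES_HOST"] %>
YAML

# Temporarily sets `vars` in ENV (a nil value unsets the variable), renders the
# ERB, parses the YAML (allowing the &default alias), then restores ENV.
def render_production_config(vars)
  saved = vars.keys.to_h { |key| [key, ENV[key]] }
  vars.each { |key, value| ENV[key] = value }
  rendered = ERB.new(DATABASE_YML).result(binding)
  YAML.safe_load(rendered, aliases: true).fetch("production")
ensure
  saved&.each { |key, value| ENV[key] = value }
end

production = render_production_config({
  "POSTGRES_USER" => "my_awesome_app",
  "POSTGRES_PASSWORD" => "example-password", # hypothetical value
  "POSTGRES_DB" => "my_awesome_app_production",
  "POSTGRES_HOST" => "192.168.0.4",
  "RAILS_MAX_THREADS" => nil, # unset, so the pool falls back to 5
})
puts production.inspect
```

Without `POSTGRES_HOST`, the rendered `host` would be empty and the adapter would fall back to its default connection, which is why that line is needed when DATABASE_URL is not used.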

Backup to an external host or S3-compatible Object Storage service

As seen in the previous post, we need to back up our database. I chose to run this accessory on the first application server, but you can run it virtually anywhere you want, even on its own server if needed.

```yaml
accessories:
  # ...
  s3_backup:
    image: eeshugerman/postgres-backup-s3:16
    host: 192.168.0.1 # Same as the first web host
    env:
      clear:
        SCHEDULE: "@daily"
        BACKUP_KEEP_DAYS: 30
        S3_REGION: your-s3-region
        S3_BUCKET: your-s3-bucket
        S3_PREFIX: backups
        S3_ENDPOINT: https://your-s3-endpoint
        POSTGRES_HOST: 192.168.0.4 # Same as the db accessory
        POSTGRES_DATABASE: my_awesome_app_production
        POSTGRES_USER: my_awesome_app
      secret:
        - POSTGRES_PASSWORD
        - S3_ACCESS_KEY_ID
        - S3_SECRET_ACCESS_KEY
```

Don't forget to add the secret environment variables to your .env file and adapt the other variables to your S3 configuration.
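`BACKUP_KEEP_DAYS` implies a simple retention rule. Assuming the image deletes backups older than that many days (check its README for the exact behavior and file naming), the rule boils down to the Ruby sketch below. The object keys are made up for the example.

```ruby
SECONDS_PER_DAY = 24 * 60 * 60

# Returns the keys whose creation time is older than `keep_days` before `now`.
# Assumption: a backup exactly `keep_days` old is still kept.
def expired_backups(backups, keep_days:, now: Time.now.utc)
  cutoff = now - keep_days * SECONDS_PER_DAY
  backups.select { |_key, created_at| created_at < cutoff }.keys
end

# 41 hypothetical daily backups, from today back to 40 days ago.
now = Time.utc(2024, 3, 31, 12)
backups = (0..40).to_h do |days_ago|
  time = now - days_ago * SECONDS_PER_DAY
  ["backups/my_awesome_app_production_#{time.strftime('%Y-%m-%d')}.dump", time]
end

expired = expired_backups(backups, keep_days: 30, now: now)
puts "#{expired.size} backups would be deleted, #{backups.size - expired.size} kept"
```

With `SCHEDULE: "@daily"` and `BACKUP_KEEP_DAYS: 30`, this flags the 10 backups that are 31 to 40 days old and keeps the other 31, so the bucket should hold roughly a month of backups.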

3. Configure a load balancer

This is the main difference from the previous configuration on a single host. A Load Balancer is mandatory here, because it is what spreads the requests between the different application servers.

Let's see how it works with Scaleway; it would be very similar with any other Load Balancer provider, such as Cloudflare Load Balancing.

See their documentation: https://www.scaleway.com/en/load-balancer

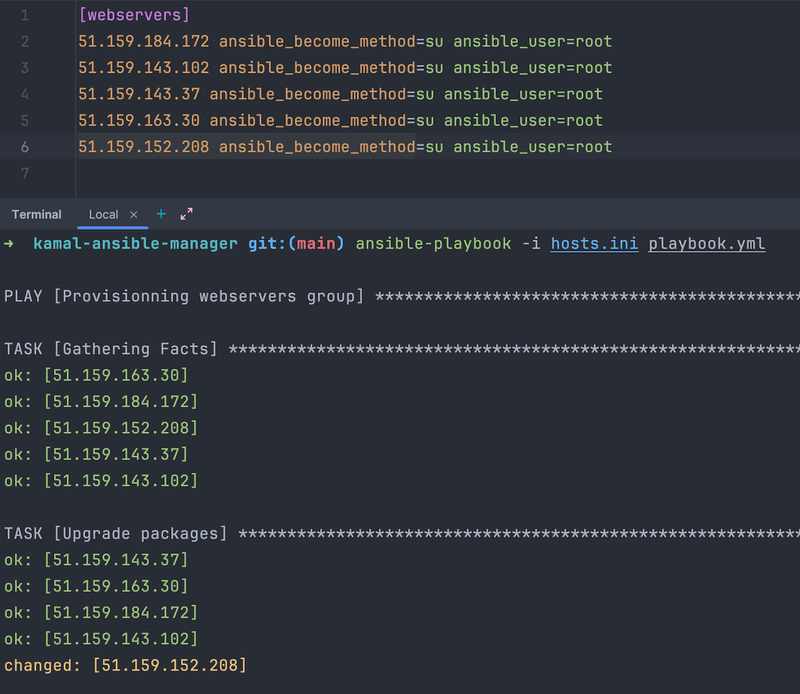

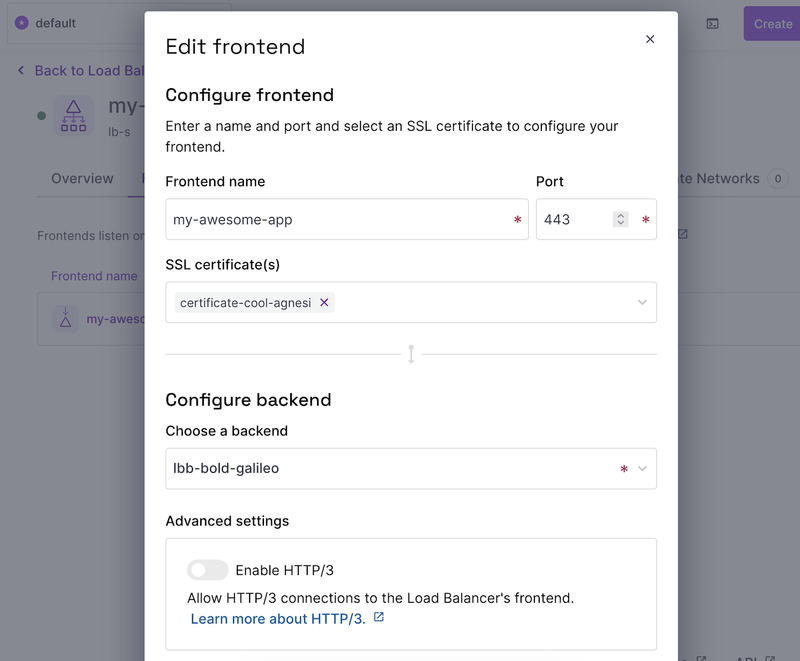

The Load Balancer can manage the SSL certificate automatically, so let's add one:

We need to add a frontend to accept external connections and create an IP address that we will use to configure our DNS:

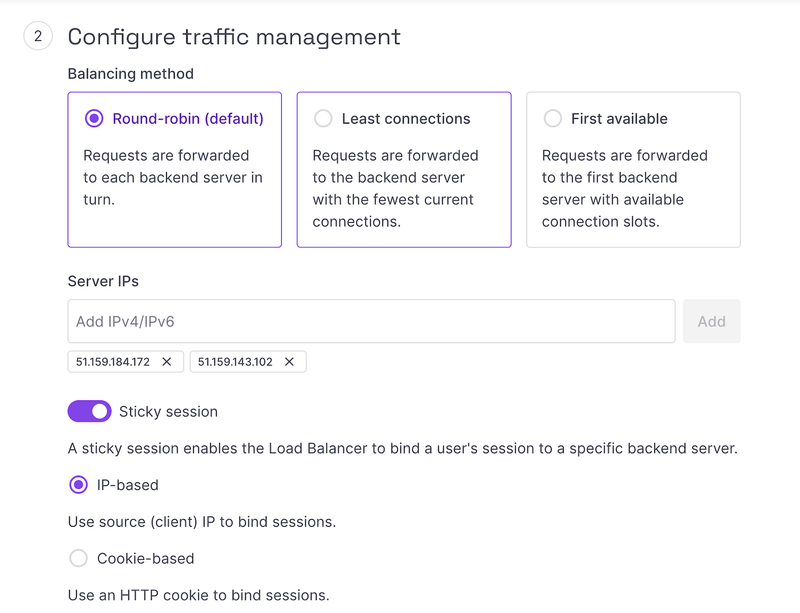

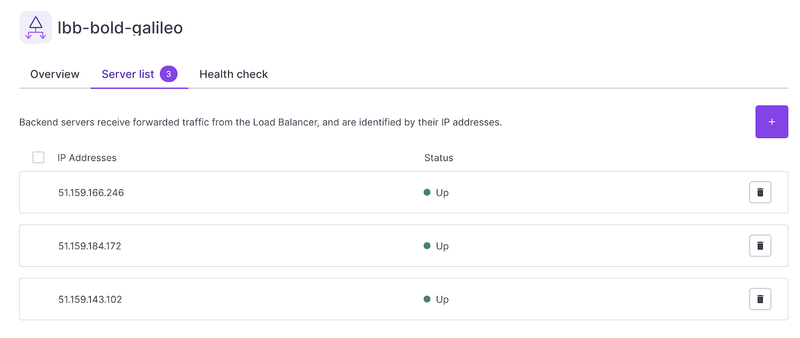

Add a backend and set all the IP addresses listed in the web section of our servers configuration:

ℹ️ You should also adapt the Load Balancer configuration according to your needs, like the healthcheck interval or the Balancing method.
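To make the balancing method and health check settings more concrete, here is an illustrative round-robin selector in Ruby that skips backends marked unhealthy. It is a simplified model for intuition, not how Scaleway implements its Load Balancer.

```ruby
# Round-robin over backends, skipping the ones a health checker marked down.
class RoundRobinBalancer
  def initialize(backends)
    @backends = backends
    @healthy = backends.to_h { |backend| [backend, true] }
    @next_index = 0
  end

  # Called by the (hypothetical) health checker after each probe.
  def mark(backend, healthy:)
    @healthy[backend] = healthy
  end

  # Returns the next healthy backend, or nil when every backend is down.
  def pick
    @backends.size.times do
      backend = @backends[@next_index]
      @next_index = (@next_index + 1) % @backends.size
      return backend if @healthy[backend]
    end
    nil
  end
end

balancer = RoundRobinBalancer.new(%w[192.168.0.1 192.168.0.2 192.168.0.7])
balancer.mark("192.168.0.2", healthy: false)
4.times { puts balancer.pick }
```

With 192.168.0.2 marked unhealthy, the four picks alternate between 192.168.0.1 and 192.168.0.7: a single failing application server is taken out of rotation instead of taking the application down.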

DNS Zone

Configure your DNS with the Load Balancer public IP address:

```
@ 86400 IN A 51.159.87.39
```

And that's it. You should be all set! 🚀

Now you can run:

```
$ kamal setup
```

And everything should now be up and running!

4. How to scale

With this setup, you can easily scale horizontally and vertically, up and down.

Because we have multiple servers that handle our requests, you can shut down or spin up servers at will.

This allows you to scale in two different ways: you can either use more powerful servers (vertical scaling) or spin up more, smaller ones (horizontal scaling).

Depending on your needs, you should use one technique or the other.
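One way to choose is a quick capacity estimate. The Ruby sketch below uses hypothetical throughput numbers and a hypothetical 70% utilization target: raising `rps_per_server` models vertical scaling, while the returned server count models horizontal scaling, plus one spare server so the application survives losing one.

```ruby
# Rough number of web servers for a given peak load. All inputs are
# hypothetical: measure your own per-server throughput first.
def web_servers_needed(peak_rps:, rps_per_server:, target_utilization: 0.7, spare: 1)
  raise ArgumentError, "rps_per_server must be positive" unless rps_per_server.positive?

  usable_rps = rps_per_server * target_utilization # don't plan to run servers at 100%
  (peak_rps / usable_rps).ceil + spare              # +spare so one server can fail
end

# Horizontal scaling: more servers of the same size.
puts web_servers_needed(peak_rps: 300, rps_per_server: 200)
# Vertical scaling: bigger servers (higher rps_per_server), fewer of them.
puts web_servers_needed(peak_rps: 300, rps_per_server: 450)
```

Here, 300 requests per second on servers that each handle 200 gives 4 servers (3 plus 1 spare), while servers handling 450 need only 2.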

For example, let's see how to add another server to our cluster.

4.1. Create a new server

Once you have created the instance with your server provider, add its IP to your Ansible hosts.ini and run the playbook (if you are using Ansible).

Besides that, you "just" have to add the new IP to the list of servers in your deploy.yml:

servers:

web:

hosts:

- 192.168.0.1

- 192.168.0.2

- 192.168.0.7 # This is the new serverPush the environment variables to the servers, including the new one:

$ kamal env pushInstall Docker on the new server if not already done:

$ kamal server bootstrapAnd deploy:

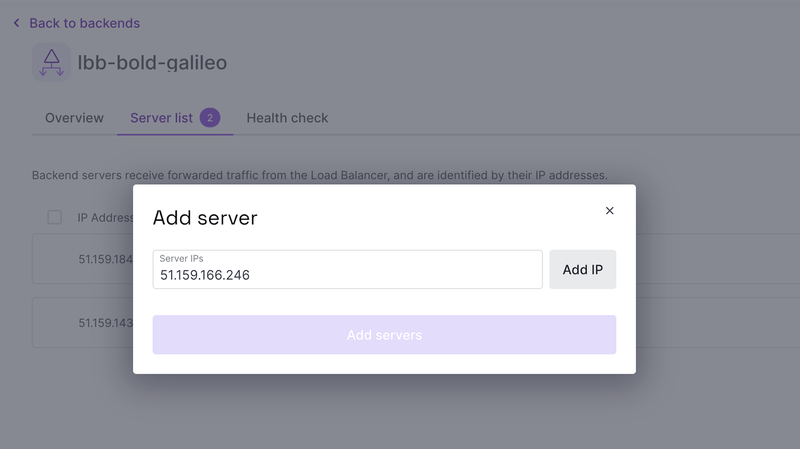

$ kamal deploy4.2. Add it to our Load Balancer

Add the new server to the Load Balancer

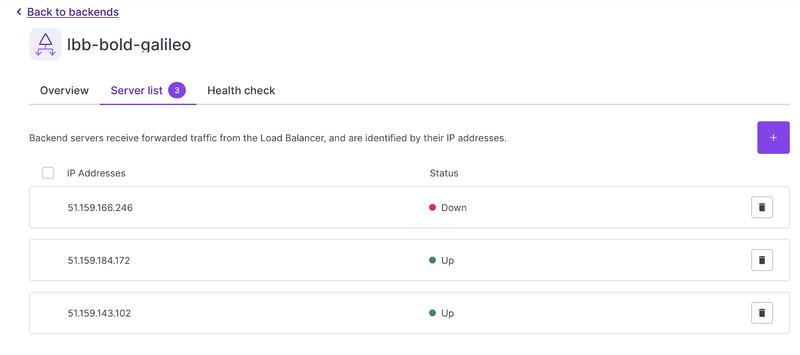

And now, we just have to wait until the server becomes ready to accept requests:

And voilà! Easy as that! You now have 3 application servers up and running to handle more requests.
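If you would rather script the wait than watch the dashboard, a small polling loop is enough. This Ruby sketch assumes the app answers on the `/up` path (the Rails 7.1+ default health check route) over plain HTTP on port 80; the helper names are hypothetical.

```ruby
require "net/http"
require "uri"

# Returns true when a GET on `url` answers with a 2xx status, false on any
# other status or network error (refused connection, timeout, ...).
def http_ok?(url, timeout: 2)
  uri = URI(url)
  response = Net::HTTP.start(uri.host, uri.port, open_timeout: timeout, read_timeout: timeout) do |http|
    http.get(uri.request_uri)
  end
  response.is_a?(Net::HTTPSuccess)
rescue StandardError
  false
end

# Calls `probe` until it returns true, sleeping `interval` seconds between
# attempts. Returns true on success, false once `attempts` are exhausted.
def wait_until_healthy(probe, attempts: 30, interval: 2)
  attempts.times do |attempt|
    return true if probe.call
    sleep(interval) unless attempt == attempts - 1
  end
  false
end

# Usage (hypothetical host):
#   wait_until_healthy(-> { http_ok?("http://192.168.0.7/up") })
```

With the defaults, the usage line returns true as soon as the new server answers with a 2xx status, or false after about a minute of retries.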

5. Put everything together

Let's put it all together, and we should be good to go.

See the complete deploy.yml:

```yaml
# Name of your application. Used to uniquely configure containers.
service: my_awesome_app

# Name of the container image.
image: my_awesome_app

# Deploy to these servers.
servers:
  web:
    hosts:
      - 192.168.0.1
      - 192.168.0.2
      - 192.168.0.7
  job:
    hosts:
      - 192.168.0.3
    cmd: bundle exec sidekiq -q default -q mailers

# Credentials for your image host.
registry:
  # Specify the registry server, if you're not using Docker Hub
  # server: registry.digitalocean.com / ghcr.io / ...
  username: guillaumebriday

  # Always use an access token rather than real password when possible.
  password:
    - KAMAL_REGISTRY_PASSWORD # Must be present in your `.env`.

# Inject ENV variables into containers (secrets come from .env).
# Remember to run `kamal env push` after making changes!
env:
  clear:
    RAILS_SERVE_STATIC_FILES: true
    POSTGRES_USER: "my_awesome_app"
    POSTGRES_DB: "my_awesome_app_production"
    POSTGRES_HOST: 192.168.0.4
    REDIS_URL: "redis://192.168.0.5:6379/0"
  secret:
    - RAILS_MASTER_KEY
    - SLACK_WEBHOOK_URL
    - GOOGLE_CLIENT_ID
    - GOOGLE_CLIENT_SECRET
    - CLOUDFRONT_ENDPOINT
    - POSTGRES_PASSWORD

# Use accessory services (secrets come from .env).
accessories:
  db:
    image: postgres:16
    host: 192.168.0.4
    port: 5432
    env:
      clear:
        POSTGRES_USER: "my_awesome_app"
        POSTGRES_DB: "my_awesome_app_production"
      secret:
        - POSTGRES_PASSWORD
    directories:
      - data:/var/lib/postgresql/data

  redis:
    image: redis:7.0
    host: 192.168.0.5
    port: 6379
    directories:
      - data:/data

  s3_backup:
    image: eeshugerman/postgres-backup-s3:16
    host: 192.168.0.1
    env:
      clear:
        SCHEDULE: "@daily"
        BACKUP_KEEP_DAYS: 30
        S3_REGION: your-s3-region
        S3_BUCKET: your-s3-bucket
        S3_PREFIX: backups
        S3_ENDPOINT: https://your-s3-endpoint
        POSTGRES_HOST: 192.168.0.4
        POSTGRES_DATABASE: my_awesome_app_production
        POSTGRES_USER: my_awesome_app
      secret:
        - POSTGRES_PASSWORD
        - S3_ACCESS_KEY_ID
        - S3_SECRET_ACCESS_KEY

healthcheck:
  interval: 5s
```

6. Conclusion

Managing your servers becomes much easier with a load balancer in front of them. Considering vertical scaling? Just destroy a smaller server and spin up a bigger one. Aiming for horizontal scaling instead? Just bring in more servers, as we did above.

In principle, load balancing is also a robust way to minimize outages, since at least one application server should always be operational. Keep in mind that in this setup the database and Redis still run on a single server each.

But with great power comes great responsibility! 🕷️🕸️

When it comes to scaling applications, it is all about trade-offs. You cannot keep adding servers indefinitely, even if it's easy.

Scaling an application is really (really) hard and cannot be summarized as "adding more servers". The good news is that you probably don't need it.

But that topic is for another blog post.

📢 This post is part of a series on Kamal

- Easy, Perfect for side/small projects: How to deploy Rails with Kamal and SSL certificate on any VPS

- Medium: Perfect for most projects: How to deploy Rails with Kamal, PostgreSQL, Sidekiq and Backups on a single host

- Expert: Perfect for big projects: How to Deploy and Scale your Rails application with Kamal