Docker: Improving Performance with Caching

Guillaume Briday

Today, we’ll discuss how Docker works on macOS and how to optimize its performance using caching.

Before diving deeper, it’s important to understand how Docker operates on macOS and Windows.

Docker runs only on Linux; it doesn’t work natively on macOS or Windows. To use Docker on macOS, the Docker for Mac application runs a minimal Linux system in a virtual machine using HyperKit, which is built on macOS's native hypervisor framework, available since OS X Yosemite (10.10). A similar approach is used on Windows with Hyper-V.

Docker and Docker Compose are installed directly in this virtual machine. All commands, networks, and data are automatically transferred between the host and the virtual machine. More details can be found in Anil Madhavapeddy's official article.

The transfer of data between your host and the virtual machine is handled by osxfs. Unfortunately, osxfs suffers from significant performance issues. The developers are well aware of these problems and have started implementing solutions. The linked article explains clearly why these issues exist on macOS.

Caching has been introduced as a workaround until osxfs is updated to provide Linux-like performance. On Linux, by contrast, a containerized application performs almost as well as one running directly on the host.

There are three possible caching options:

- delegated: Data in the container takes precedence over the host, causing a delay between changes in the container and their reflection on the host. This improves write speeds.
- cached: Data on the host takes precedence over the container, causing a delay between changes on the host and their propagation to the container. This improves read speeds.
- consistent: The default option, ensuring perfect consistency between host and container data.

There’s no universally better option—it depends on your specific needs.
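As a rough illustration of how the three modes map onto a bind mount, here is a minimal sketch. `mount_arg` is a hypothetical helper (it is not part of Docker), and the paths are placeholders. It only builds the `host:container:mode` string passed to `docker run -v` and rejects any mode other than the three listed above.

```shell
#!/bin/sh
# Hypothetical helper: build the value for `docker run -v` with a
# consistency mode. Falls back to "consistent", the default behavior.
mount_arg() {
  host_dir="$1"
  container_dir="$2"
  mode="${3:-consistent}"
  case "$mode" in
    consistent|cached|delegated) ;;
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
  printf '%s:%s:%s' "$host_dir" "$container_dir" "$mode"
}

# Example for a read-heavy development setup, where the host is authoritative:
# docker run -v "$(mount_arg "$(pwd)" /application cached)" -d laravel-blog
```

Swapping `cached` for `delegated` in the commented example would favor writes made inside the container instead.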

As noted in the documentation, the delegated option is available but not yet fully mature, so don’t expect a major performance difference compared to cached.

Caution! Both delegated and cached can lead to data loss in case of issues.

Fortunately, perfect data consistency isn’t always required. For instance, in my development environment, I often read data from the database but write to it far less frequently. Prioritizing read performance at the expense of consistency is acceptable in this context.

In production, the performance problem doesn’t arise because I run on Linux. Even so, I’d leave the default behavior unchanged there, as data loss isn’t acceptable.

To enable caching in your docker-compose.yml, simply add the option to the end of your volume declaration:

    services:
      blog-server:
        volumes:
    -     - ./:/application
    +     - ./:/application:cached

Or directly with Docker, for example:

    $ docker run -v $(pwd):/application:cached -d laravel-blog

A few tests

These tests aren’t scientific or highly precise but serve to provide an idea of the performance difference, which is noticeable in practice.

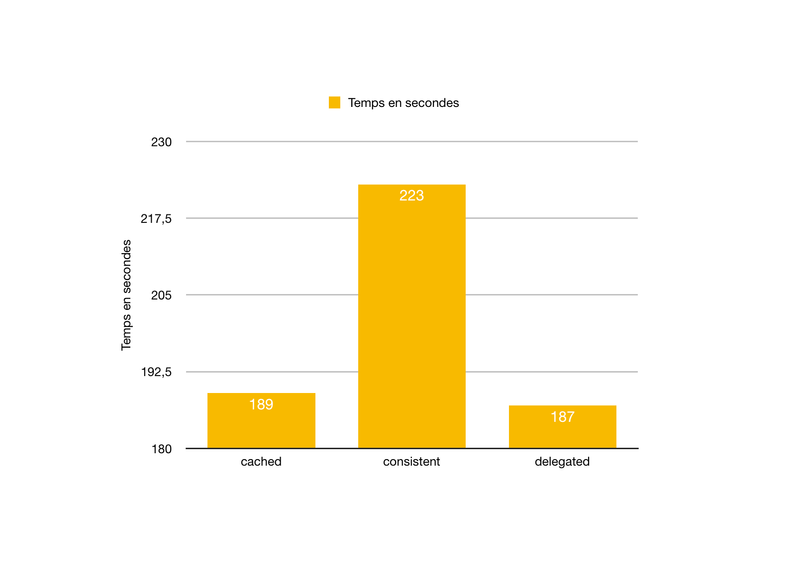

Composer install

$ docker run -it --rm -v $(pwd):/app composer/composer install

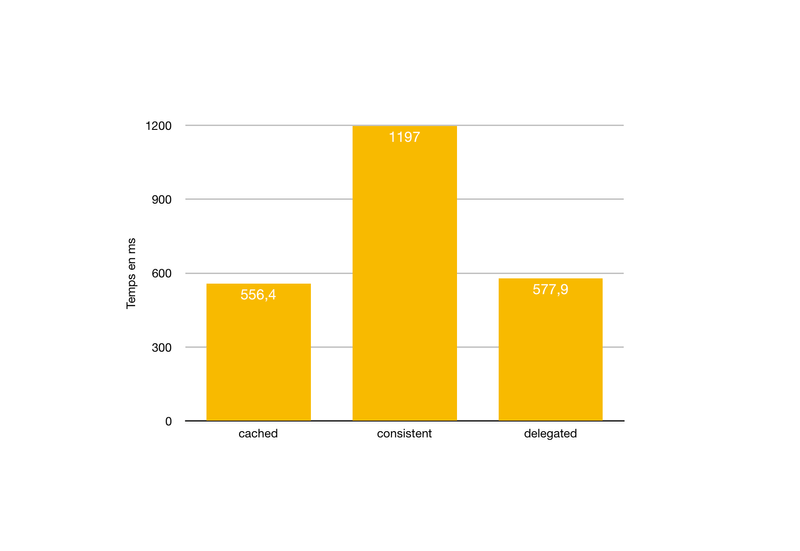

Loading a homepage

I calculated the average load time for the Laravel blog’s homepage over ten requests:

A significant improvement can be observed when using cached and delegated. Load times decreased by almost 54%.
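For readers who want to repeat this kind of measurement, here is a minimal sketch of averaging load times over several requests. It is not the exact method used for the figures above: the `average` and `measure` helpers, the URL, and the request count are all assumptions. It relies on curl's `--write-out` variable `time_total`.

```shell
#!/bin/sh
# Average a list of timings (one number per line, in seconds) read from stdin.
average() {
  awk '{ sum += $1; n++ } END { if (n > 0) printf "%.3f\n", sum / n }'
}

# Request a URL `count` times with curl and print the mean total time.
# Both arguments are placeholders; adjust them to your own setup.
measure() {
  url="$1"
  count="${2:-10}"
  i=0
  while [ "$i" -lt "$count" ]; do
    # Discard the response body, print only the total request time.
    curl -s -o /dev/null -w '%{time_total}\n' "$url"
    i=$((i + 1))
  done | average
}

# Hypothetical usage, once with and once without the :cached option:
# measure http://localhost:8000/ 10
```

Running it against the same page with each mount option gives comparable averages, though like the tests above it remains an informal measurement.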

I hope further improvements will come soon. For now, this seems like a good compromise.

Thank you!